How to combine large CSV files without using Excel

If you are opening large CSV files in Excel, copying it, pasting it to another open Excel file (to be saved as CSV), open another CSV file and repeat, then you’re doing it wrong. It doesn’t matter how powerful your laptop or PC is but opening them in Excel would take time and it’s not a fun experience.

![]()

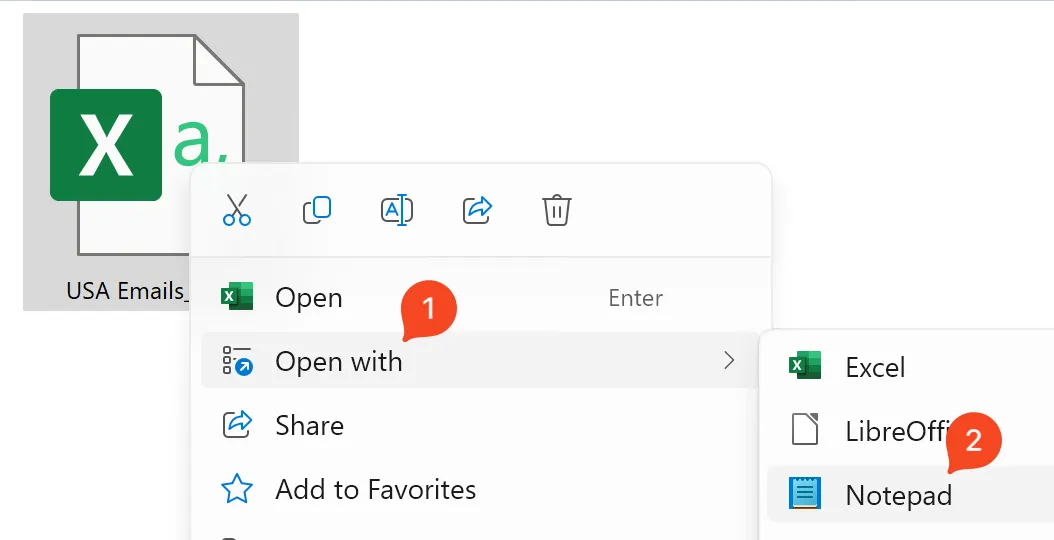

In addition, when you open a CSV file in Excel, you might see something like this.

Ever wondered what was that for? .csv is different from .xlsx. CSV files are just plain text that’s why you can open them in Notepad. Try to open a .xlsx file in a Notepad and you’d just get some random characters. Thus, if you open a CSV file in Excel, Excel will try its best to open and show the file to you but because it’s not a native Excel file, a warning occurs; hence the warning.

Speaking of Notepad, one thing you can do is to open them in Notepad instead and do the copy/pasting there. In this way, you might speed up your copy/pasting and the most important thing is, the data will be preserved as is.

But what if you want to combine CSV files but you want to filter them? Then you still have to open the combined CSV file in Excel right? Supposed that the combined CSV file is 12gb in size, then that would take forever. That’s why you want to filter them first before combining so that the combined CSV file is not that large. Might be just 6gb? But that’s still large!

First, the problem with filtering first then combining is that, what if you need another filter later on? You’d need to do the combining all over again. Second, if we combine first then filter later, opening the combined file (in Excel) would take forever.

Our solution: use a database. Now, hear me out. Databases might be a little bit complicated but here, the database we’d use is simple to use and it’s just a file. You can copy it to a flash drive and move it anywhere you want. It doesn’t need a fancy setup to use. This database is called SQLite.

To proceed, you need to have Python installed on your system. Follow this for the installation.

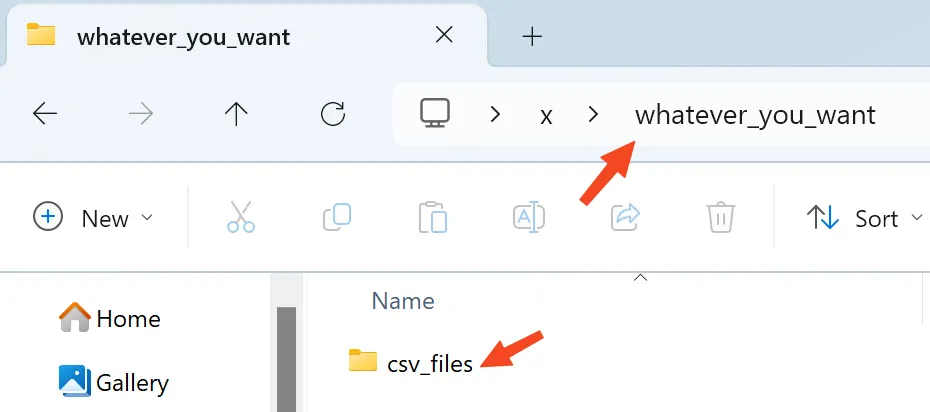

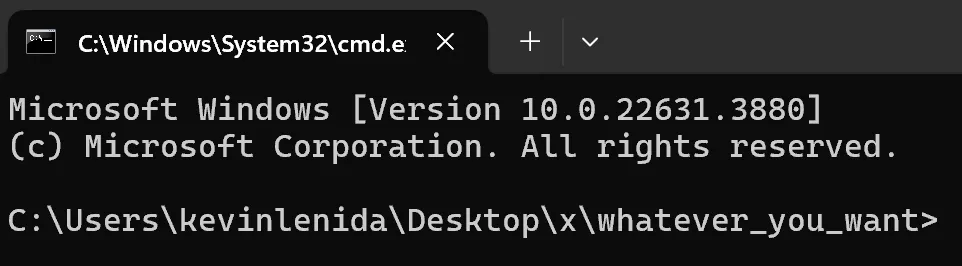

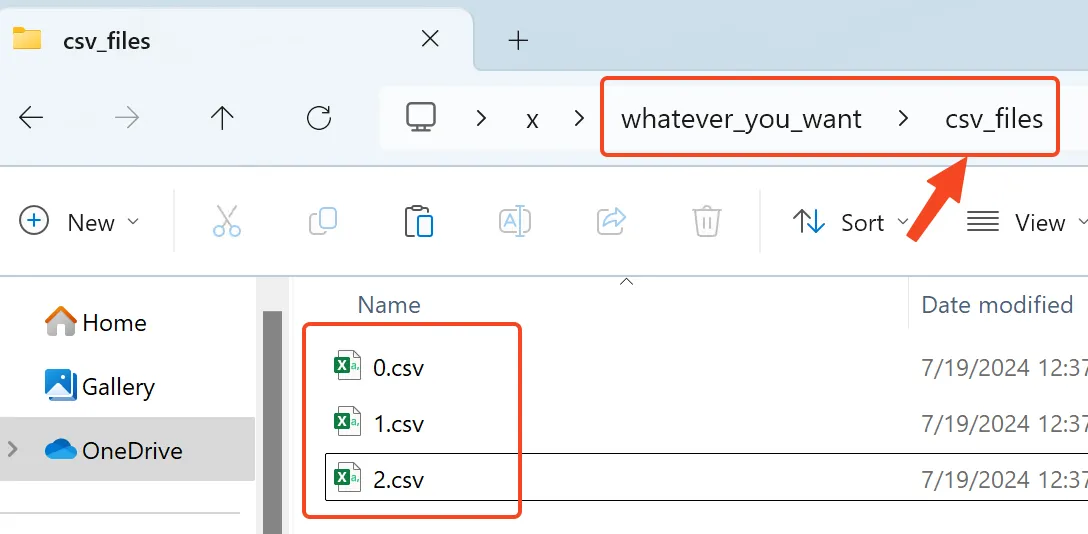

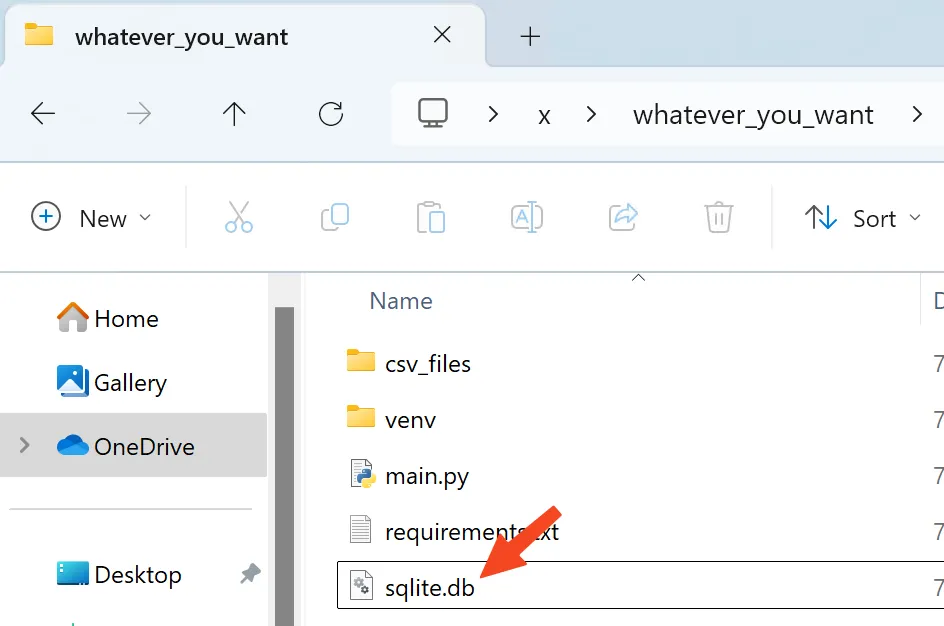

Once Python is installed, create a folder somewhere (name it whatever you want) and within it, created a folder named csv_files.

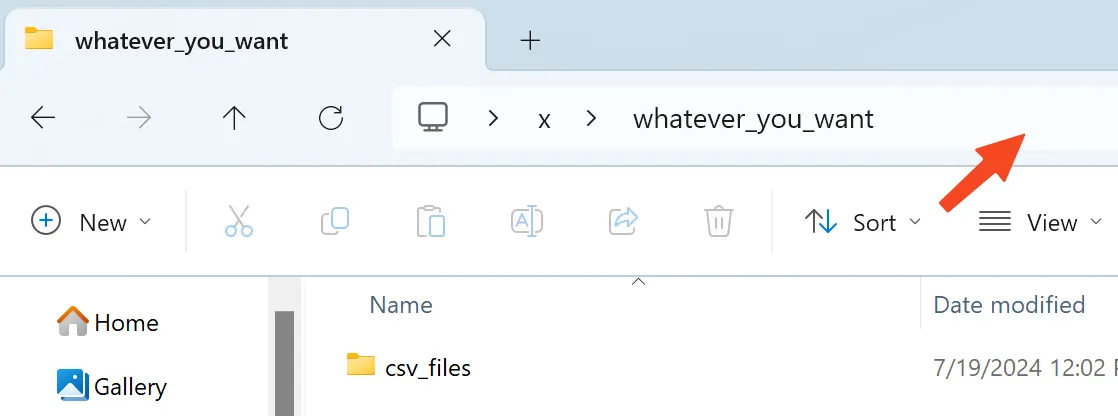

Click the empty space beside the folder path above.

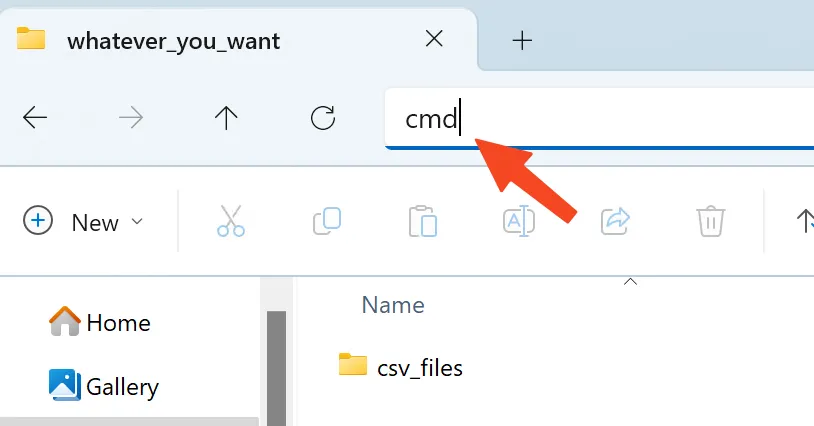

Type cmd and press Enter.

Command Prompt will open.

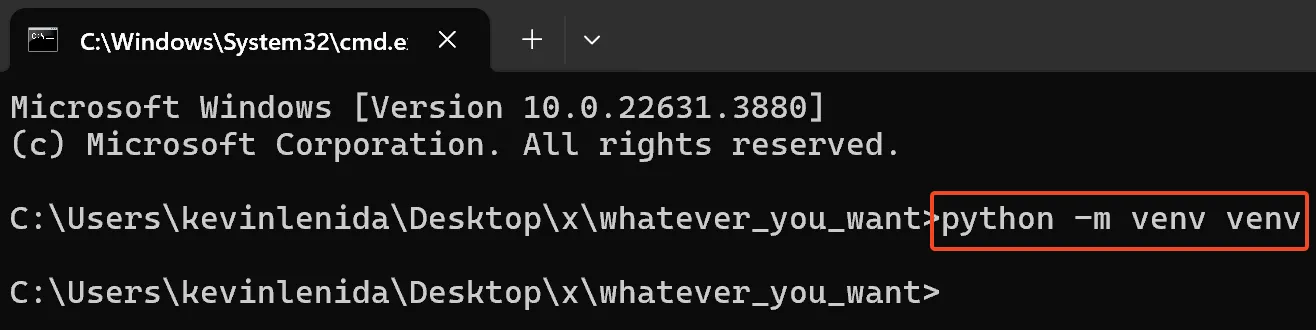

Enter the following command in the Command Prompt then press Enter. This is to create a Python virtual environment.

python -m venv venv

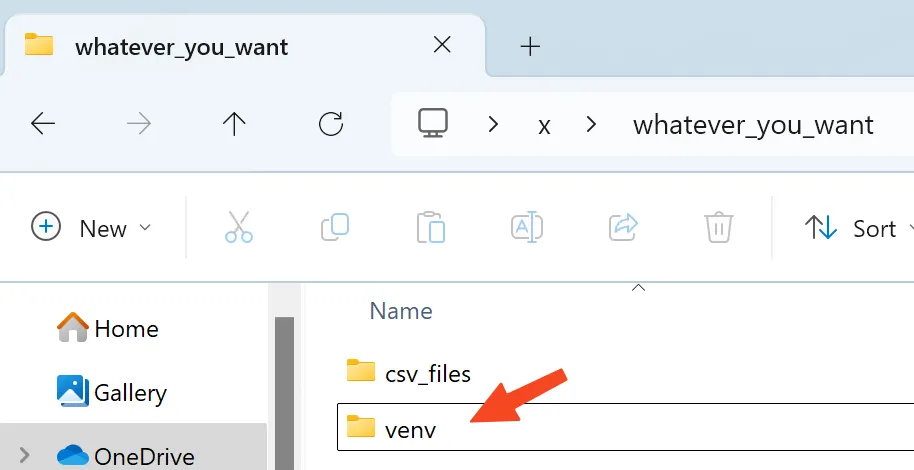

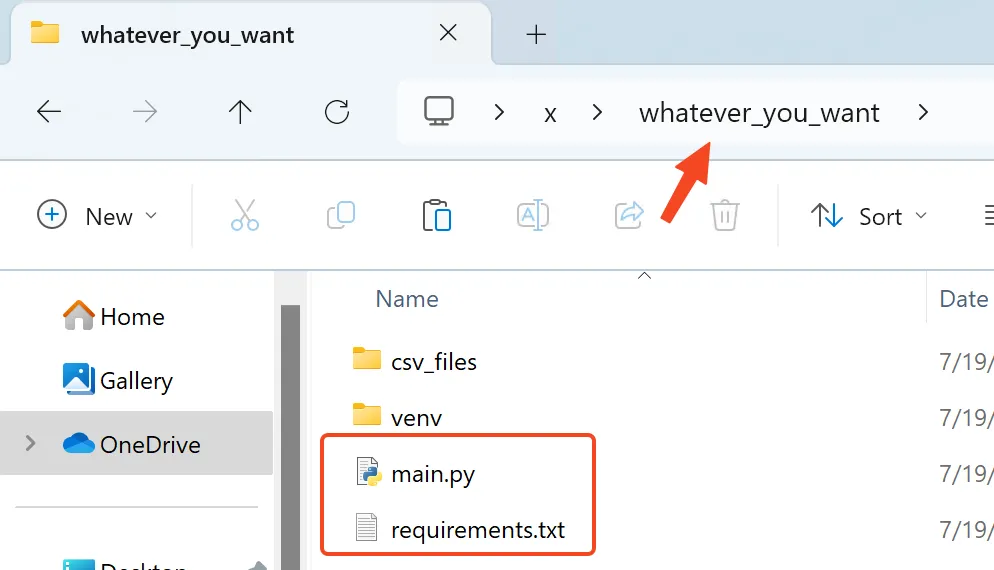

Wait for it to finish. Once finished, a new folder named venv is created.

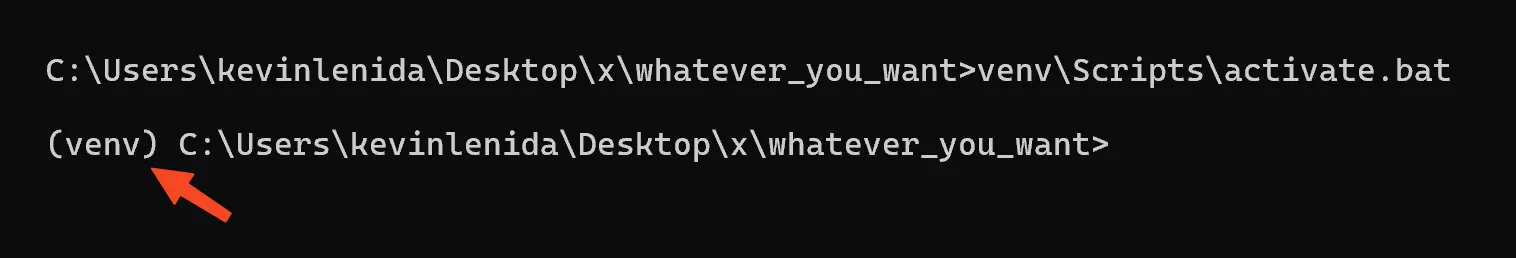

Now, to activate the virtual environment, run the following command:

venv\Scripts\activate.bat

Once activated, you’d see (venv) on the left side.

Now, if you haven’t put all the CSV files you want to combine in the csv_files folder, now’s the time. (You don’t have to name your CSV files like this).

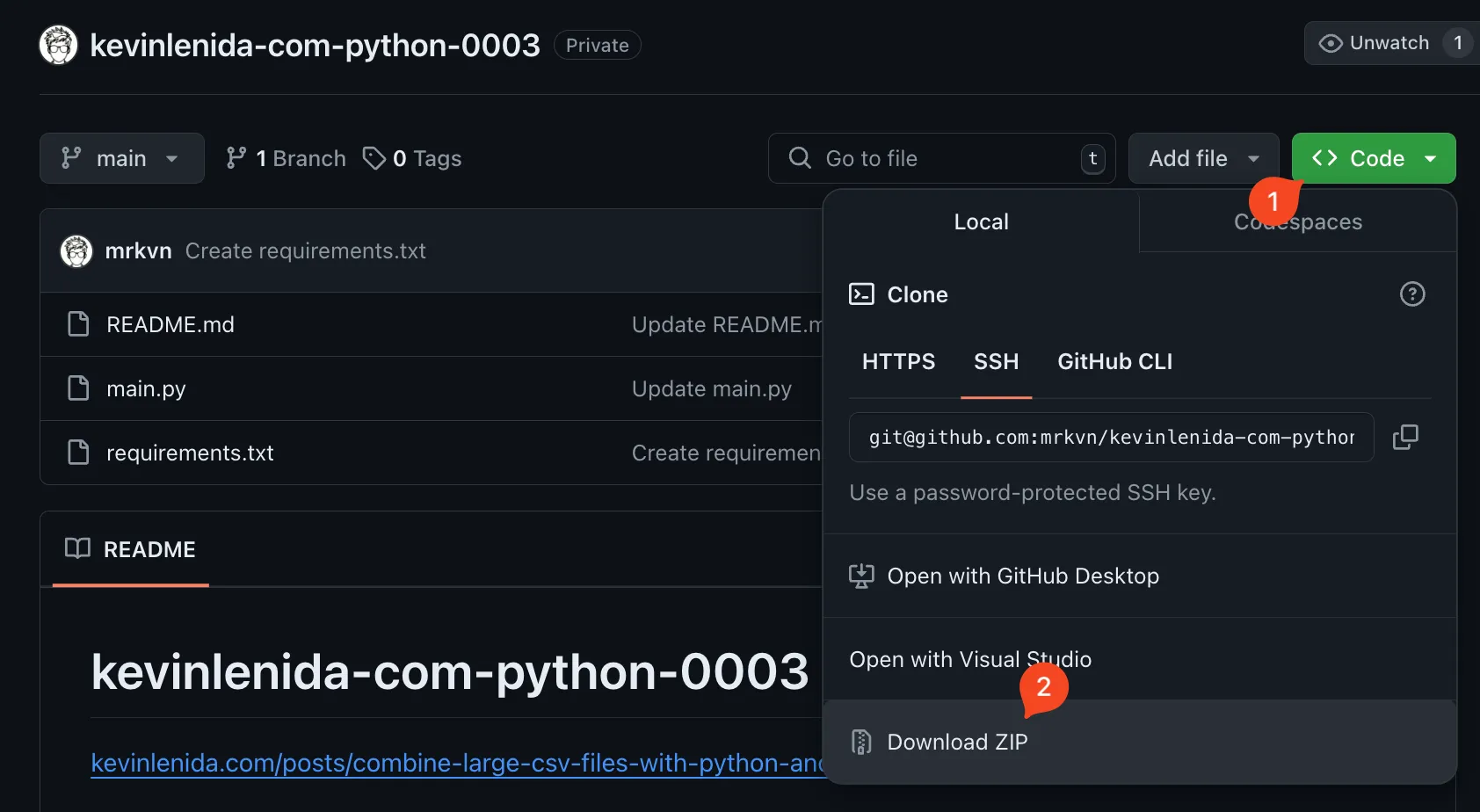

Go to this Github link to download the files we need.

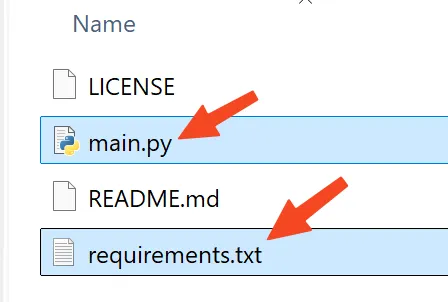

Once downloaded, open the zip file and copy these two files.

Paste them to your folder.

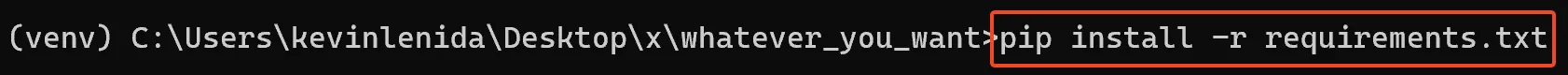

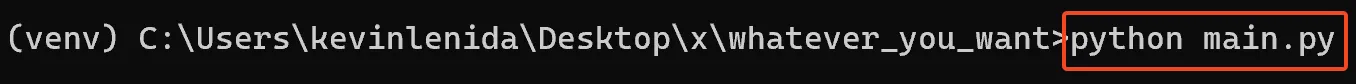

Run this command to install the requirements.

pip install -r requirements.txt

You’d see something like this when it runs. Wait for it to finish.

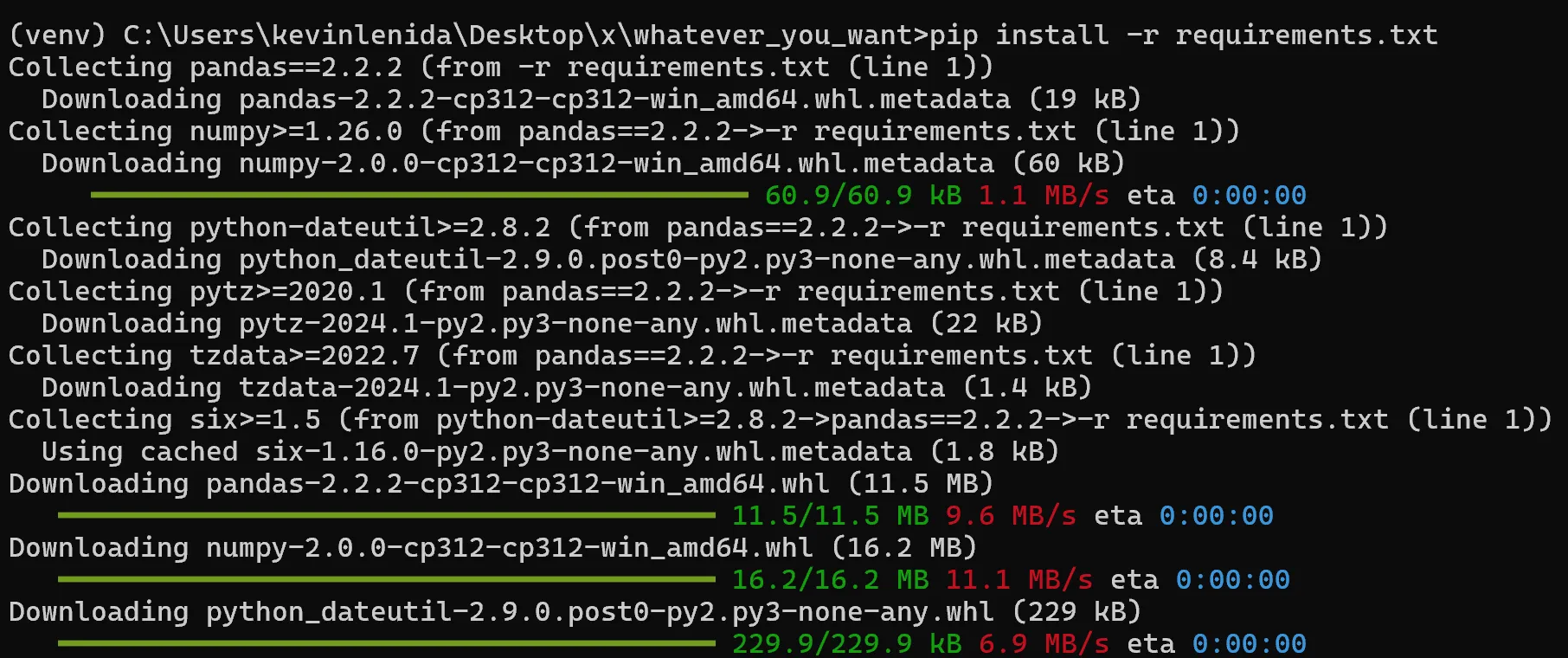

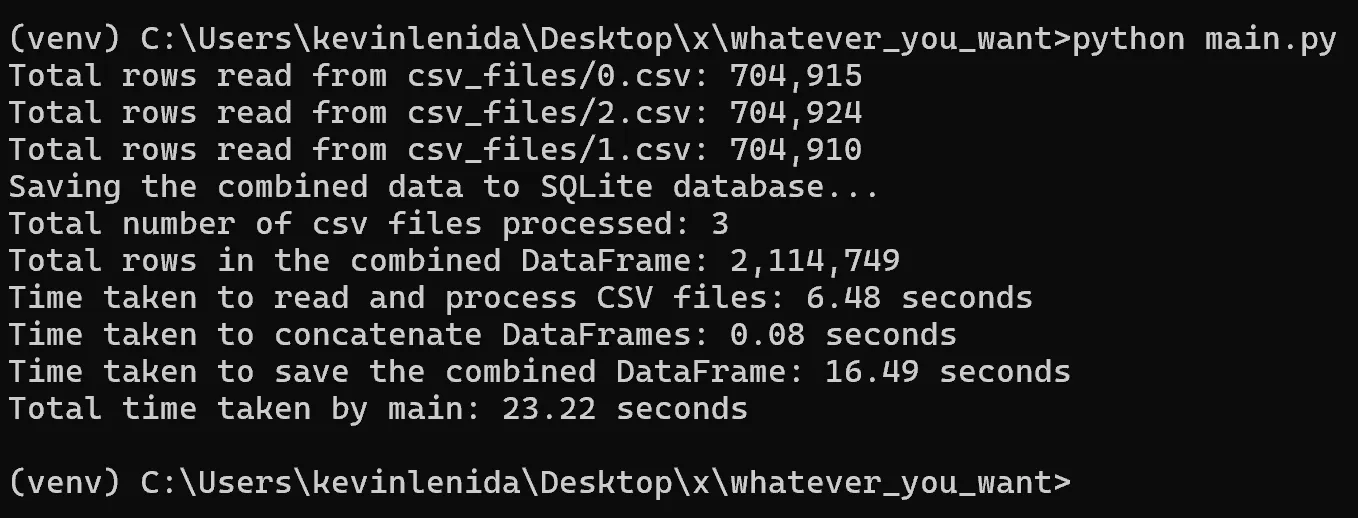

Now, the moment of truth. Run this command to start combining!

python main.py

This is what you’d see when the script is running. (If the script did not run properly, your setup might be wrong or the script just doesn’t work for your CSV files. Lol. The goal is for it to work on any CSV file so if it doesn’t, let me know so that we can fix it :D)

Once finished, you’d see a new file named sqlite.db.

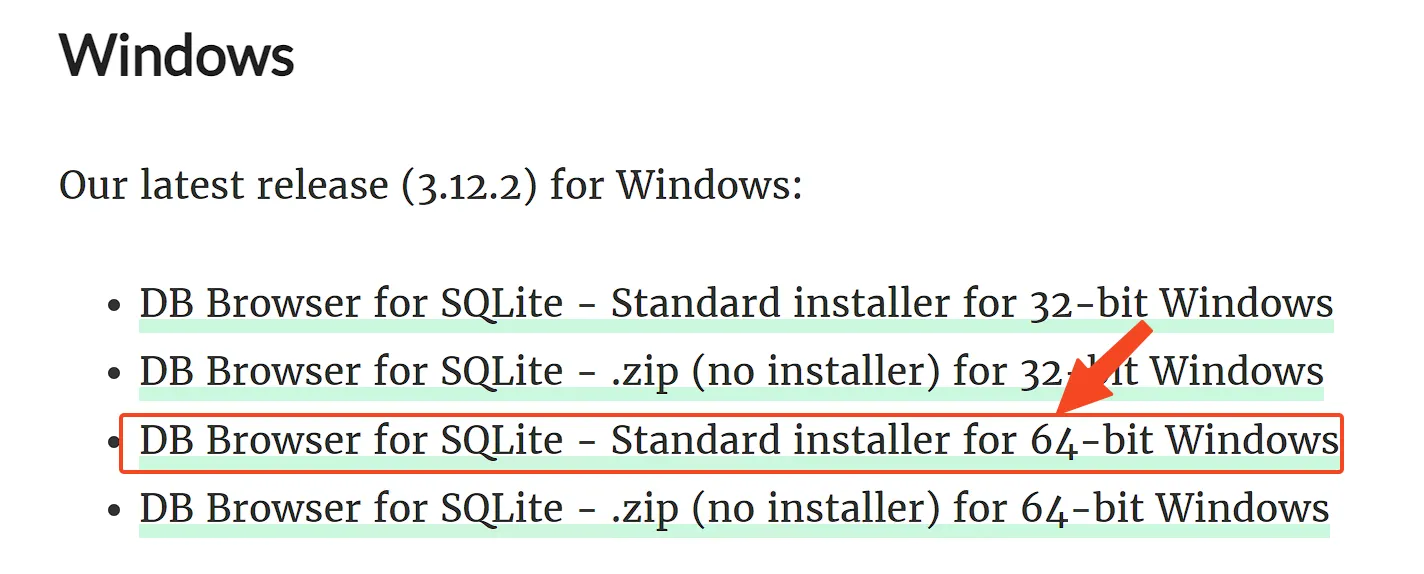

To navigate the data within this file, we need to install DB Browser for SQLite. Download it from here.

Once downloaded, install it. The installation is pretty straightforward so I won’t be showing it here. Just proceed with the default settings in the installer.

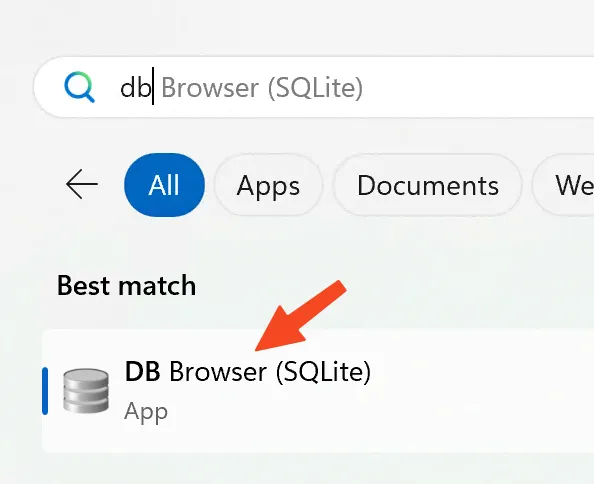

After installation, open it.

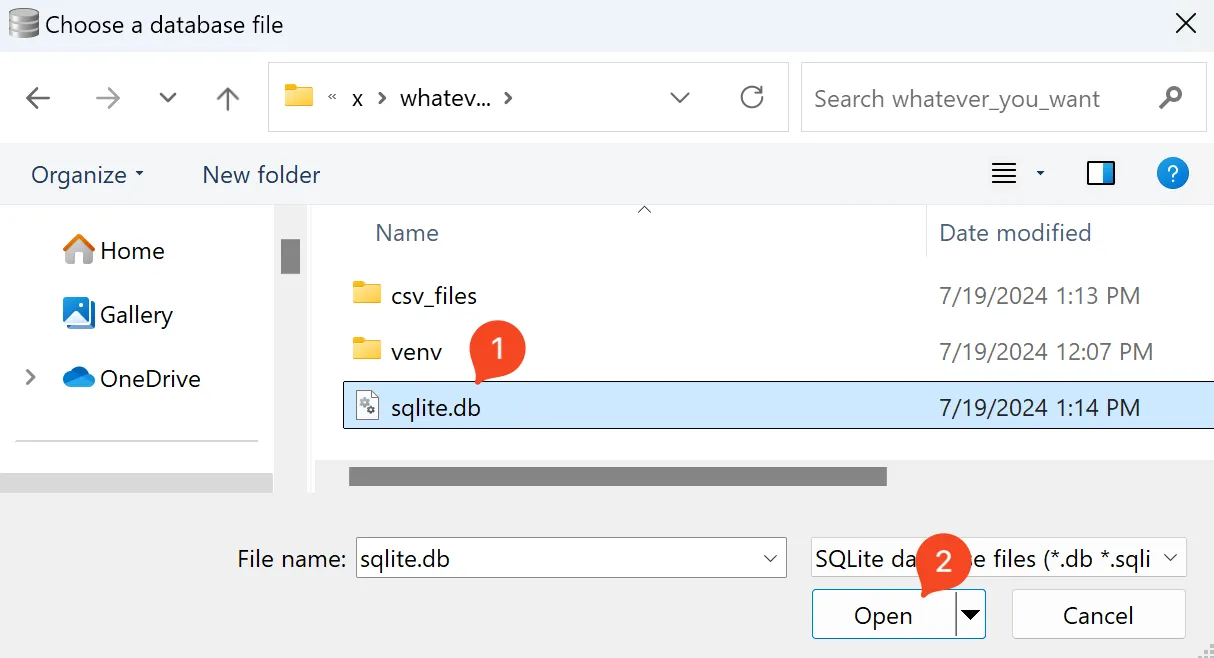

Click Open Database on the top left part, find the sqlite.db and open it.

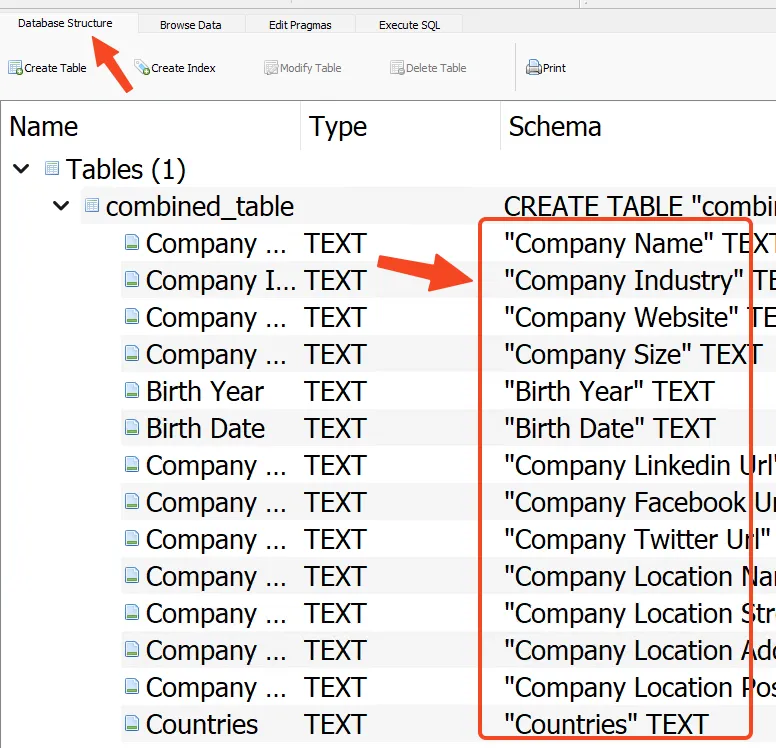

Under the Database Structure tab, you’d see the columns that were created.

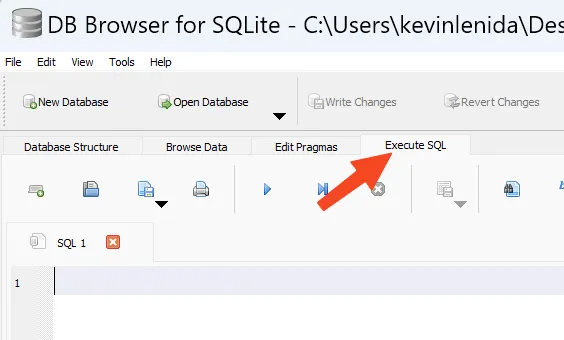

Now, to filter and export to csv the data that you need, go to Execute SQL tab.

Did I mention that you need to know SQL for this? Don’t worry, we don’t need much, at least for a simple filtering. (But still, learning SQL would go a long way so I recommend you do that)

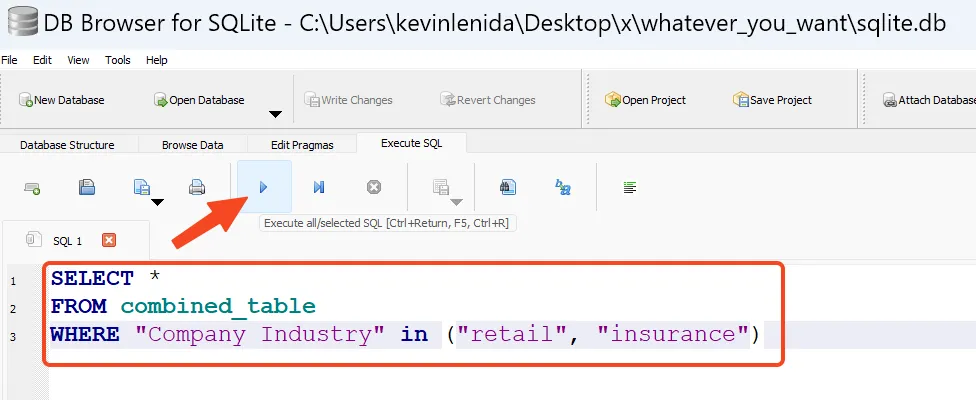

Here is a sample SQL Query to get all columns, with Company Industry that is either retail or insurance. Take note, this only works for my particular data. You need to write your own SQL Query for your own data.

SELECT *

FROM combined_table

WHERE "Company Industry" in ("retail", "insurance")

SQL Explanation:

The SELECT * means, get all columns.

The name of the table that the Python script created is called combined_table. Hence, FROM combined_table.

The last line is to filter the Company Industry column that has a value of retail or insurance.

Click the play button to run the query.

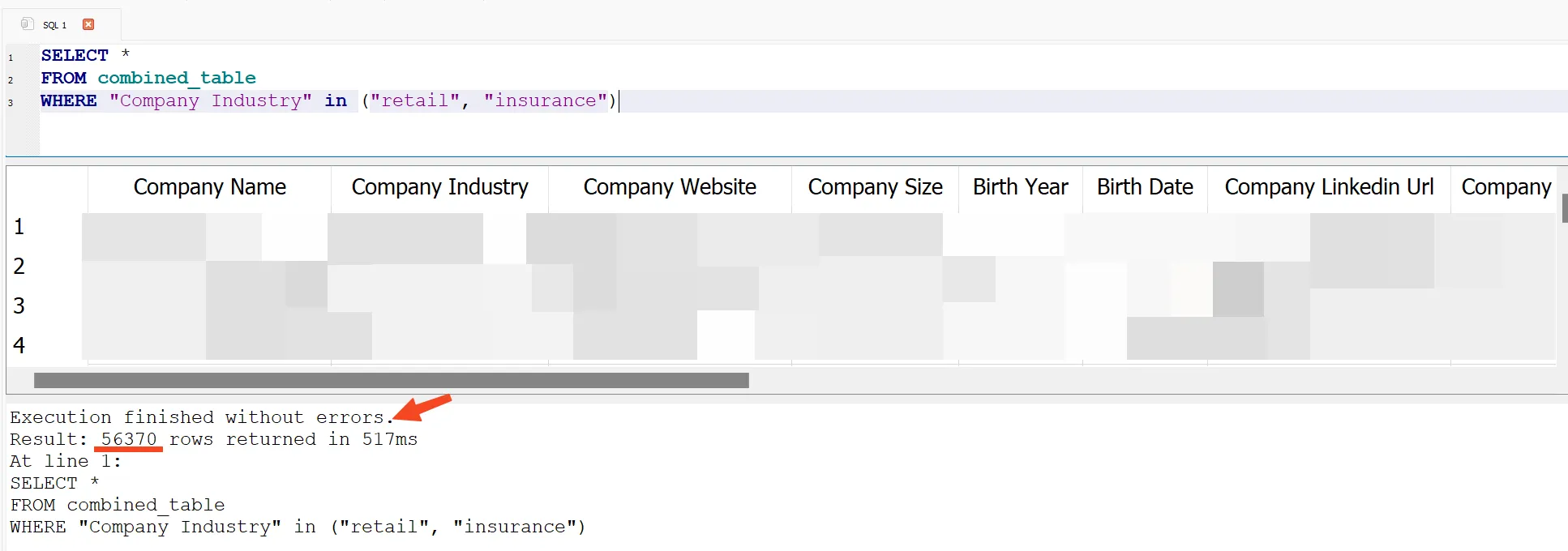

Here are the results. The data is displayed in the blurred portion of the image. At the bottom, you can see that Execution finished without errors. This means that the SQL Query is valid. The number of rows is also displayed.

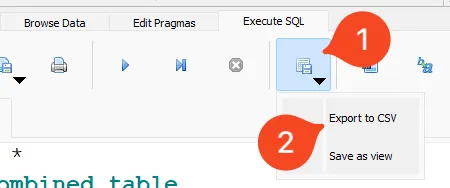

If you are happy with the data, we can now export it to CSV. To export, still under Execute SQL tab, click this icon then Export to CSV.

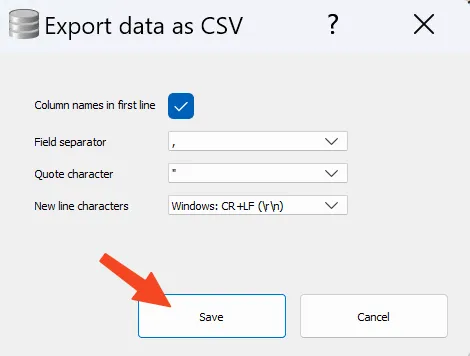

Click Save.

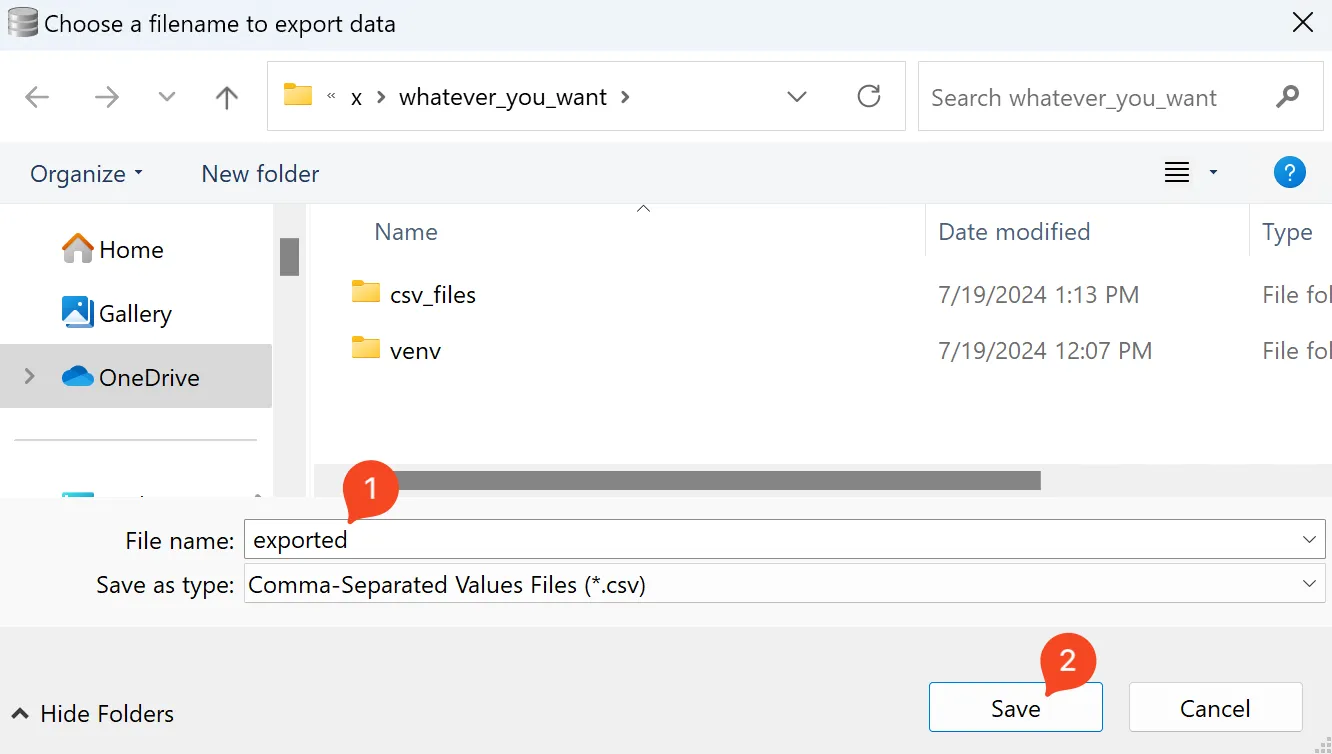

Then save wherever.

And now we’re done. I know it took a lot of effort, especially if this is your first time using Python and SQL but it’s not too bad right? With Python, you just have to install it and run some commands. The code is already provided so it’s not that bad. For SQL, it requires a little bit of SQL knowledge because you need to know how to query your own data. The example query I provided is very simple that it does not showcase much but learning SQL is worth it, especially if you work with large data that a spreadsheet cannot handle much. This use-case is a perfect example.

Again, the sqlite.db file is just a file. You can copy it anywhere. Make a back up of it. Move it to another computer, etc. You don’t need fancy software for SQLite, other than the db browser that we used.

Take note, every time you run the Python script, it will replace the data in the existing sqlite.db so I recommend you make a back up of it before running the script again.